Kubernetes

I focus mostly on designing and building network infrastructure, but this often means I need a solid understanding of other disciplines. In recent years, I started hearing about Docker and Kubernetes from co-workers who worked on compute platforms. I did sysadmin work before I started focusing on networking, so understanding Docker (Linux containers) wasn’t that difficult once I compared it to virtual machines. Kubernetes, though, I couldn’t wrap my head around at first. I went through multiple articles, videos, diagrams, etc. and was still confused. I decided that I needed to build a lab and get some hands-on experience before things would really “click” in my mind.

I actually built this project back when everybody was mostly staying at home because of COVID. I usually try to travel internationally every year, but with COVID travel restrictions I ended up taking a “stay-cation”. I spent most of that time building and rebuilding my Kubernetes cluster until I finally understood it. My point is that you might need to do this yourself to really understand it, but hopefully my explanations can help jump-start your learning.

Project Goals

- Learn Kubernetes

- Migrate my home servers from Docker to Kubernetes

Hardware Build

I was initially running some home servers on Docker, hosted on my Synology NAS. The NAS has limited resources, so I wanted to migrate everything to a separate piece of hardware. I wanted something small, with low power usage and low noise. I considered building another SFF PC, but in the end I chose the Intel NUC platform.

I used the following hardware.

- Frost Canyon NUC 10

- 32GB RAM

- 500GB NVMe SSD

To be honest, this hardware has been kind of overkill for my current use. I originally looked into doing this project with Raspberry Pis, but there were none available for sale anywhere, so I decided to build the lab on virtual machines instead. If you’re considering another platform, research the hypervisor’s support for your chosen hardware first. I found that ESXi on other platforms required an additional NIC, but the Intel NUC platform was supported by ESXi out of the box, without an additional NIC or drivers.

Hypervisor / VM Builds

I chose VMware ESXi 7.0 as my hypervisor because I’m already familiar with the platform. I also read up a bit on Proxmox, but decided that for my home use, the free ESXi license would suffice. You can check out how to get a free license for ESXi here.

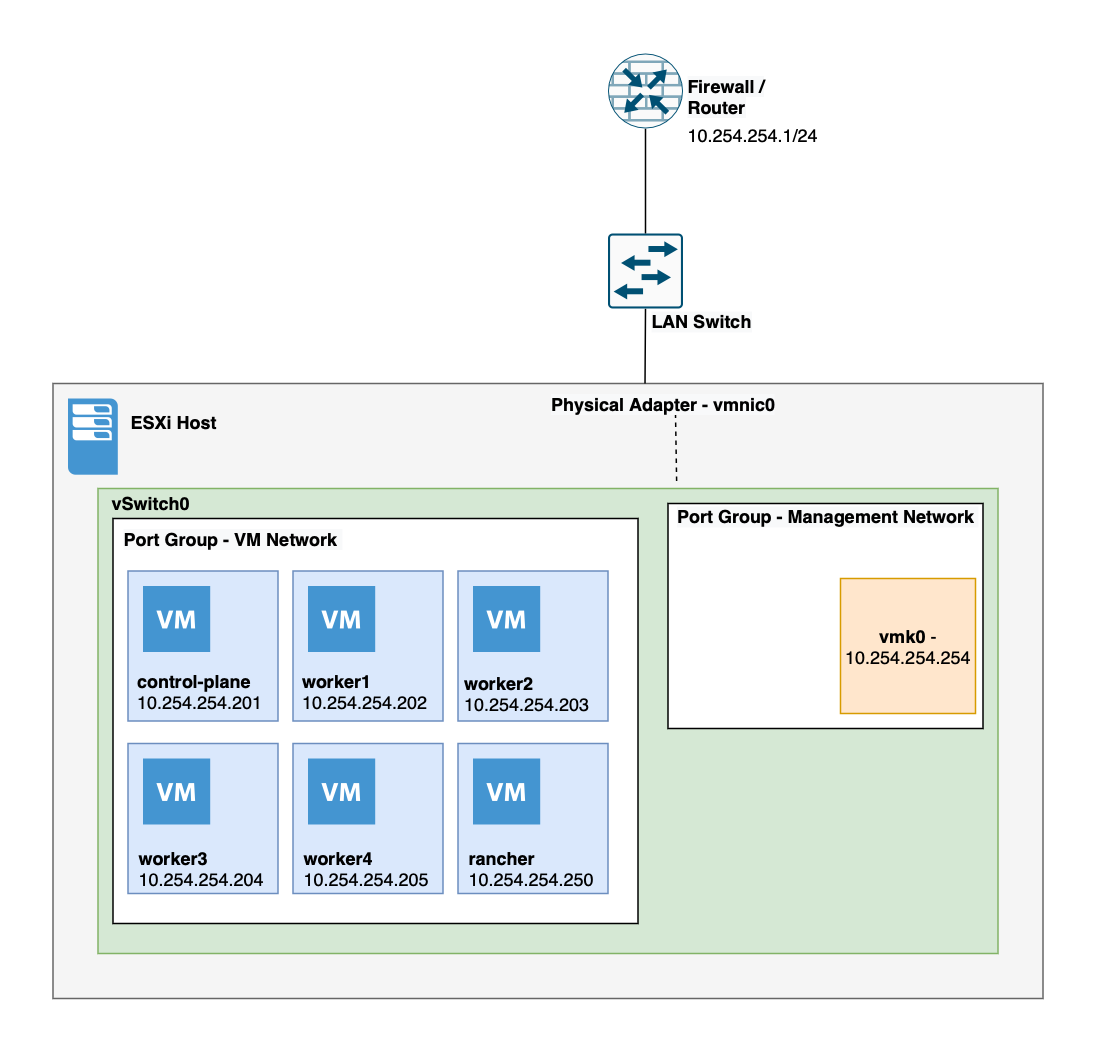

My VM layout ended up looking like the diagram above. Ignore the VM names for now; they relate to the Kubernetes setup. I built six Ubuntu VMs in total, all in the same subnet. I won’t go into the details of how to build the VMs, but I deployed Ubuntu 20.04 LTS; at the time, Ubuntu 22.04 LTS hadn’t been released yet. Once I had the VMs up and running, it was time to move on to the Kubernetes build.

K3S Install

Initially when I started this project, I had planned to use k8s. I wrote Ansible playbooks to automate all my VM setup and deploy k8s. I got the control-plane and worker nodes running, but I still didn’t quite understand how to use the cluster to run my servers. I needed a GUI so I could click around and get an understanding of how things worked. While researching, I found Rancher for managing Kubernetes clusters, and also found k3s. After digging further into k3s, I decided it better fit my needs, so I scrapped my k8s build and started over with k3s. Compared to k8s, k3s was also super easy to set up. I also found that running Rancher helped me understand Kubernetes.

I started by building the control-plane node.

curl -sfL https://get.k3s.io | K3S_KUBECONFIG_MODE="644" INSTALL_K3S_CHANNEL=latest sh -s - --disable servicelb --disable traefik

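This installs k3s with ServiceLB (Klipper) and Traefik disabled. K3S_KUBECONFIG_MODE="644" makes the generated kubeconfig (/etc/rancher/k3s/k3s.yaml) readable by non-root users, so kubectl works without sudo. I planned to use MetalLB as my load balancer, so Klipper had to be disabled to keep the two from both handling LoadBalancer services. I also planned to use Nginx as my ingress controller instead of Traefik, so I disabled Traefik during the install as well. This is just my personal preference; I might try out Traefik later.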
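The same options can also live in a config file instead of on the install command line: k3s reads /etc/rancher/k3s/config.yaml by default, and each key is a CLI flag without the leading dashes (K3S_KUBECONFIG_MODE corresponds to --write-kubeconfig-mode). Below is a minimal sketch of that approach, not what I actually ran; the function name and the K3S_CONFIG_DIR override are hypothetical, and the override only exists so the function can be tried against a scratch directory.

```shell
# Sketch: write a k3s server config equivalent to the install flags above.
# Keys mirror the CLI flags: write-kubeconfig-mode (set by K3S_KUBECONFIG_MODE)
# and disable, which is repeatable, so it becomes a YAML list.
# K3S_CONFIG_DIR is a hypothetical override for testing; k3s itself reads
# /etc/rancher/k3s/config.yaml by default.
write_k3s_server_config() {
  local dir="${K3S_CONFIG_DIR:-/etc/rancher/k3s}"
  mkdir -p "$dir"
  cat > "$dir/config.yaml" <<'EOF'
write-kubeconfig-mode: "0644"
disable:
  - servicelb
  - traefik
EOF
}
```

Usage, as root on the control-plane node: source the script, run write_k3s_server_config, then run the installer without the extra flags (curl -sfL https://get.k3s.io | INSTALL_K3S_CHANNEL=latest sh -). Keeping the options in a file also documents the server’s settings on the node itself.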

To join the cluster, the worker nodes need the token from the control-plane node. It is stored in a file that only root can read:

sudo cat /var/lib/rancher/k3s/server/node-token

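On every worker node, I ran the following to join the cluster, replacing <TOKEN> with the token from the previous step. K3S_URL points at the control-plane node (10.254.254.201).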

curl -sfL https://get.k3s.io | INSTALL_K3S_CHANNEL=latest K3S_TOKEN="<TOKEN>" K3S_URL="https://10.254.254.201:6443" sh -

After a few minutes, the worker nodes should have joined the cluster. This can be verified by running ‘kubectl get nodes’ on the control-plane node.
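If you want to script that check, for example at the end of an automated build, here is a small hypothetical helper that reads the kubectl get nodes table on stdin and succeeds only when every node reports Ready. It assumes the default output format, where STATUS is the second column; a cordoned node shows Ready,SchedulingDisabled and is counted as not ready here.

```shell
# Sketch: succeed (exit 0) only if every node listed by `kubectl get nodes`
# is Ready. Reads the table on stdin so it can be tested without a cluster.
# Assumes the default output format, where STATUS is the second column.
all_nodes_ready() {
  awk '
    NR == 1 { next }                  # skip the NAME/STATUS/... header row
    { total++; if ($2 == "Ready") ready++ }
    END { exit !(total > 0 && ready == total) }  # an empty node list fails
  '
}
```

Usage on the control-plane node: kubectl get nodes | all_nodes_ready && echo "all nodes Ready". kubectl also has a built-in way to block until nodes are ready, kubectl wait --for=condition=Ready node --all --timeout=300s, which may be simpler if you don’t need to parse the output.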

Rancher

Since I had only a very basic understanding of Kubernetes, I installed Rancher as a GUI to administer my cluster. The install was fairly straightforward.

The first step is to create a directory for the Rancher configuration and a config.yaml file inside it. These commands need root privileges.

mkdir -p /etc/rancher/rke2

cd /etc/rancher/rke2

vim config.yaml

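In config.yaml, I filled in the following: token is a shared secret of my choosing, and tls-san adds the address I would use to reach Rancher (10.254.254.250) to the server’s TLS certificate.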

token: <SHAREDSECRET>
tls-san:
  - 10.254.254.250
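Rather than making up the shared secret by hand, it can be generated. A minimal sketch, assuming the same /etc/rancher/rke2/config.yaml path and keys shown above; the function name and the RKE2_CONFIG_DIR override are hypothetical, and the 64-hex-character token length is an arbitrary choice, not a Rancher requirement.

```shell
# Sketch: write /etc/rancher/rke2/config.yaml with a freshly generated
# shared secret and one TLS SAN (the address used to reach Rancher).
# RKE2_CONFIG_DIR is a hypothetical override for testing.
write_rancher_config() {
  local san="$1"
  [ -n "$san" ] || { echo "usage: write_rancher_config <address>" >&2; return 1; }
  local dir="${RKE2_CONFIG_DIR:-/etc/rancher/rke2}"
  local token
  # 32 random bytes rendered as 64 lowercase hex characters
  token=$(head -c 32 /dev/urandom | od -An -tx1 | tr -d ' \n')
  mkdir -p "$dir"
  # The file holds a secret: umask covers a newly created file,
  # chmod also tightens an existing one.
  (umask 077; printf 'token: %s\ntls-san:\n  - %s\n' "$token" "$san" > "$dir/config.yaml")
  chmod 600 "$dir/config.yaml"
}
```

Run it as root with the Rancher address as the argument, e.g. write_rancher_config 10.254.254.250. Each run overwrites the file with a new secret, so to be safe only run it before the first install.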

With the configuration in place, Rancher can be installed. After the install, the rancherd-server service needs to be enabled and started, and the admin password generated with rancherd reset-admin.

curl -sfL https://get.rancher.io | sh -

systemctl enable rancherd-server.service

systemctl start rancherd-server.service

rancherd reset-admin

I browsed to https://10.254.254.250 and logged in with the credentials that reset-admin printed. Once logged in, I was able to add my k3s cluster to Rancher.

Next Steps

This post is already getting long, so I think I’ll split off my app deployments and Kubernetes specifics into another post. At this point I had my cluster up and running with a GUI set up. These steps are actually notes I wrote after many iterations of trying to get this to work. Initially I started with k3s with no customizations, then found that I wanted MetalLB, so I had to tear it all down and start again. After I had it all running, I needed to figure out ingress, how to run deployments, how to organize namespaces, etc. Hopefully somebody out there finds some value in the time I spent banging my head against the wall until I got this into a working state. When I have some time, I’ll post a follow-up on Kubernetes deployments, networking, etc.
